I had some time to deep dive into GraphX, and since it is only supported in Scala, it was a good learning experience. Coming from experiments with graph databases, GraphX seemed an easy platform, and it frees you from worrying about sharding or partitioning your data. The GraphX paper and Bob DuCharme's blog are good starting points for playing with GraphX.

So I managed to get Indian movie data for 10 different languages (Hindi, Marathi, Punjabi, Telugu, Tamil, Bhojpuri, Kannada, Gujarati, Bengali, Malayalam), along with the actors and directors associated with the films.

Next came building the relationships between these entities. GraphX identifies each vertex in the graph by a VertexId, which is a Long (64 bit). So I generated an ID for every entity and substituted the IDs into the movie file, which made parsing easier later when creating edges (relationships). What makes GraphX super easy and super fun is that partitioning takes place on vertices, i.e., edges are distributed across nodes and a vertex travels along with each of its edges, so it may be copied to several nodes, but its VertexId is the same on every node. The essential syntax to consider is -

For Vertex -> RDD[(VertexId, VertexObject)]

In my case, the vertex RDD is -> RDD[(VertexId, (String, String))]

For Edge -> Edge(srcVertexId, dstVertexId, "<edgeValueString>")

The edge value can also be a URI or another data type, for example a numeric weight that you use to choose which destination to follow.

These two when combined together in this line create the graph object for us -

val graph = Graph(entities,relationships)
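As a minimal sketch of how these pieces fit together, the following builds a three-vertex graph. The sample data, the object name `TinyMovieGraph` and the index-based ID assignment are assumptions for illustration only; my real IDs were pre-generated.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.graphx.{Edge, Graph, VertexId}
import org.apache.spark.rdd.RDD

object TinyMovieGraph {
  // Hypothetical sample entities as (name, role) pairs
  val names: Seq[(String, String)] = Seq(
    ("dilwale dulhania le jayenge", "movie"),
    ("shah rukh khan", "actor"),
    ("hindi", "language"))

  // Assumption: each entity simply gets its index as a Long vertex id
  val ids: Map[String, VertexId] =
    names.map(_._1).zipWithIndex.map { case (n, i) => (n, i.toLong) }.toMap

  def build(sc: SparkContext): Graph[(String, String), String] = {
    // Vertex RDD: (VertexId, (name, role))
    val entities: RDD[(VertexId, (String, String))] =
      sc.parallelize(names.map { case (n, r) => (ids(n), (n, r)) })
    val movie = ids("dilwale dulhania le jayenge")
    // Edge RDD: Edge(srcVertexId, dstVertexId, edgeValue)
    val relationships: RDD[Edge[String]] = sc.parallelize(Seq(
      Edge(movie, ids("shah rukh khan"), "actor"),
      Edge(ids("shah rukh khan"), movie, "inFilm"),
      Edge(movie, ids("hindi"), "inLanguage")))
    Graph(entities, relationships)
  }

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local").setAppName("TinyMovieGraph"))
    val graph = build(sc)
    // Render each triplet to a String before collecting to the driver
    graph.triplets
      .map(t => s"${t.srcAttr._1} -[${t.attr}]-> ${t.dstAttr._1}")
      .collect()
      .foreach(println)
    sc.stop()
  }
}
```

Each triplet carries the source attribute, the edge value and the destination attribute together, which is what the queries in the full program filter on.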

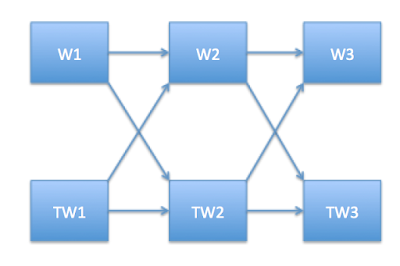

Graph depiction of data

So, I ended up connecting each movie to all its actors and back, to its director and back, and to its language and back. This led to about half a million edges in my graph (only half of them are distinct relationships, since I made two edges, one in each direction, between every entity and its movie). I also added literal properties to the movie (these are not nodes) such as imdbRating and potentialAction (the IMDb web URL for that movie). Literal properties could instead be stored directly in the vertex object if that object is a class with the properties as fields. The code to construct and query the graph is below. The main program could be replaced by a Scalatra web server exposing the same queries, but I was more focused on the graph functionality.
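Before the full listing, here is a small sketch of two ideas from the paragraph above: emitting an edge together with its reverse, and keeping literal properties as fields of the vertex object. The names `Entity`, `EdgePairs` and `both` are hypothetical and do not appear in my program, which keeps the literals in a separate RDD instead.

```scala
import org.apache.spark.graphx.{Edge, VertexId}

// Hypothetical vertex object that carries the literal properties directly
case class Entity(
  name: String,
  role: String,
  imdbRating: Option[Double] = None,
  potentialAction: Option[String] = None)

object EdgePairs {
  // Returns the forward edge and the matching reverse edge
  def both(src: VertexId, dst: VertexId,
           forward: String, back: String): Seq[Edge[String]] =
    Seq(Edge(src, dst, forward), Edge(dst, src, back))
}
```

With a helper like this, `EdgePairs.both(movieId, actorId, "actor", "inFilm")` would yield the movie-to-actor edge and the actor-to-movie edge in one call.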


import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.SparkContext._

import org.apache.spark.graphx._

import org.apache.spark.rdd.RDD

import scala.io.Source

import org.json4s._

import org.json4s.jackson.JsonMethods._

import scala.collection.mutable.ListBuffer

object MoviePropertyGraph {

val baseURI = "http://kb.rootchutney.com/propertygraph#"

class InitGraph(val entities:RDD[(VertexId, (String, String))], val relationships:RDD[Edge[String]],

val literalAttributes:RDD[(Long,(String,Any))]){

val graph = Graph(entities,relationships)

def getPropertyTriangles(): Unit ={

// Output object property triples

graph.triplets.foreach( t => println(

s"<$baseURI${t.srcAttr._1}> <$baseURI${t.attr}> <$baseURI${t.dstAttr._1}> ."

))

}

def getLiteralPropertyTriples(): Unit = {

entities.foreach(t => println(

s"""<$baseURI${t._2._1}> <${baseURI}role> \"${t._2._2}\" ."""

))

}

def getConnectedComponents(): Unit = {

val connectedComponents = graph.connectedComponents()

graph.vertices.leftJoin(connectedComponents.vertices) {

case(id, entity, component) => s"${entity._1} is in component ${component.get}"

}.foreach{ case(id, str) => println(str) }

}

def getMoviesOfActor(): Unit = {

println("Enter name of actor:")

val actor = Console.readLine()

graph.triplets.filter(triplet => (triplet.srcAttr._1.equals(actor)) && triplet.attr.equals("inFilm"))

.foreach(t => println(t.dstAttr._1))

}

def getMoviesOfDirector(): Unit = {

println("Enter name of director:")

val director = Console.readLine()

graph.triplets.filter(triplet => (triplet.srcAttr._1.equals(director)) && triplet.attr.equals("movie"))

.foreach(t => println(t.dstAttr._1))

}

def getMoviesInLanguage(): Unit = {

println("Enter language:")

val lang = Console.readLine()

graph.triplets.filter(triplet => (triplet.dstAttr._1.equals(lang)) && triplet.attr.equals("inLanguage"))

.foreach(t => println(t.srcAttr._1))

}

def getTopMoviesBeginningWith(): Unit = {

println("Enter prefix/starting tokens:")

val prefix = Console.readLine()

graph.vertices.filter(vertex => (vertex._2._1.startsWith(prefix)) && vertex._2._2.equals("movie"))

.distinct()

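.leftOuterJoin(literalAttributes.filter(literalTriple => (literalTriple._2._1.equals("imdbRating"))))

// Movies without an imdbRating get 0.0 instead of failing on Option.get

.map { case (_, ((name, _), rating)) => (name, rating.map(_._2.asInstanceOf[Double]).getOrElse(0.0)) }

.sortBy(element => element._2, false)

// collect() brings the sorted results to the driver so they print in order

.collect()

.foreach(t => println(t._1 + " " + t._2))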

}

def getActorsInMovie(): Unit = {

println("Enter movie:")

val movie = Console.readLine()

graph.triplets.filter(triplet => (triplet.srcAttr._1.equals(movie)) && triplet.attr.equals("actor"))

.foreach(t => println(t.dstAttr._1))

}

}

def main(args: Array[String]) {

val sc = new SparkContext(new SparkConf().setMaster("local").setAppName("MoviePropertyGraph"))

val movieFile = ""

val actorFile = ""

val directorFile = ""

val languageFile = ""

val movies = readJsonsFile(movieFile, "movie", sc)

val actors = readCsvFile(actorFile, "actor", sc)

val directors = readCsvFile(directorFile, "director", sc)

val languages = readCsvFile(languageFile, "language", sc)

val entities = movies.union(actors).union(directors).union(languages)

val relationships: RDD[Edge[String]] = readJsonsFileForEdges(movieFile, sc)

val literalAttributes = readJsonsFileForLiteralProperties(movieFile, sc)

val graph = new InitGraph(entities, relationships, literalAttributes)

// Some supported functionality in graphx

// graph.getPropertyTriangles()

// graph.getLiteralPropertyTriples()

// graph.getConnectedComponents()

var input = 0

do {

println("1. Movies of actor \n2. Movies of director \n3. Movies in language \n4. Top Movies beginning with " +

"\n5. Actors in movie \n6. Exit\n")

input = Console.readInt()

input match {

case 1 => graph.getMoviesOfActor()

case 2 => graph.getMoviesOfDirector()

case 3 => graph.getMoviesInLanguage()

case 4 => graph.getTopMoviesBeginningWith()

case 5 => graph.getActorsInMovie()

case 6 => println("bye !!!")

case something => println("Unexpected case: " + something.toString)

}

} while (input != 6);

sc.stop

}

def readJsonsFile(filename: String, entityType: String, sparkContext:SparkContext): RDD[(VertexId, (String, String))] = {

try {

val entityBuffer = new ListBuffer[(Long,(String,String))]

for (line <- Source.fromFile(filename).getLines()) {

val jsonDocument = parse(line)

// movieId is stored as a JSON string, so convert it to a Long vertex id

val movieId = (jsonDocument \ "movieId" \\ classOf[JString]).head.toLong

val name = (jsonDocument \ "name" \\ classOf[JString]).head

entityBuffer.append((movieId, (name, entityType)))

}

return sparkContext.parallelize(entityBuffer)

} catch {

case ex: Exception => println("Exception in reading file:" + filename + ex.getLocalizedMessage)

return sparkContext.emptyRDD

}

}

def readCsvFile(filename: String, entityType: String, sparkContext:SparkContext): RDD[(VertexId, (String, String))] = {

try {

val entityBuffer = new ListBuffer[(Long,(String,String))]

// Delimiters are an assumption about the file layout: entries separated by ", " and name/id separated by ":"

for (line <- Source.fromFile(filename).getLines()) {

entityBuffer.appendAll(line.substring(1, line.length - 1).split(", ")

.map(_.split(":"))

.map { case Array(k, v) => (k.substring(2, k.length-1), v.substring(1, v.length).toLong)}

.map { case(k,v) => (v,(k,entityType))})

}

return sparkContext.parallelize(entityBuffer)

} catch {

case ex: Exception => println("Exception in reading file:" + filename + ex.getLocalizedMessage)

return sparkContext.emptyRDD

}

}

def readJsonsFileForEdges(filename: String, sparkContext:SparkContext): RDD[Edge[String]] = {

try {

val relationshipBuffer = new ListBuffer[Edge[String]]

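for (line <- Source.fromFile(filename).getLines()) {

val jsonDocument = parse(line)

val movieId = (jsonDocument \ "movieId" \\ classOf[JString]).head.toLong

val director = (jsonDocument \ "director" \\ classOf[JInt]).head.toLong

val inLanguage = (jsonDocument \ "inLanguage" \\ classOf[JInt]).head.toLong

val actors = jsonDocument \ "actors" \\ classOf[JInt]

// Two edges per relationship, one in each direction

for (actor <- actors) {

relationshipBuffer.append(Edge(movieId, actor.toLong, "actor"))

relationshipBuffer.append(Edge(actor.toLong, movieId, "inFilm"))

}

relationshipBuffer.append(Edge(movieId, director, "director"))

relationshipBuffer.append(Edge(director, movieId, "movie"))

relationshipBuffer.append(Edge(movieId, inLanguage, "inLanguage"))

// Assumed label for the language -> movie direction

relationshipBuffer.append(Edge(inLanguage, movieId, "movie"))

}

val relationshipRDD = sparkContext.parallelize(relationshipBuffer)

println(relationshipRDD.count)

return relationshipRDD

} catch {

case ex: Exception => println("Exception in reading file:" + filename + ex.getLocalizedMessage)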

return sparkContext.emptyRDD

}

}

def readJsonsFileForLiteralProperties(filename: String, sparkContext:SparkContext): RDD[(Long,(String,Any))] = {

try {

val literalsBuffer = new ListBuffer[(Long,(String,Any))]

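for (line <- Source.fromFile(filename).getLines()) {

val jsonDocument = parse(line)

val movieId = (jsonDocument \ "movieId" \\ classOf[JString]).head.toLong

val imdbRating = (jsonDocument \ "imdbRating" \\ classOf[JDouble]).head

val potentialAction = (jsonDocument \ "potentialAction" \\ classOf[JString]).head

// Literal properties are keyed by the movie's vertex id

literalsBuffer.append((movieId, ("imdbRating", imdbRating)))

literalsBuffer.append((movieId, ("potentialAction", potentialAction)))

}

return sparkContext.parallelize(literalsBuffer)

} catch {

case ex: Exception => println("Exception in reading file:" + filename + ex.getLocalizedMessage)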

return sparkContext.emptyRDD

}

}

}

The function "getTopMoviesBeginningWith" is the coolest of all the functions: it joins the literal property (imdbRating) into the same RDD, sorts the movies by it, and so provides a crude autosuggest for movies starting with a prefix.
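To make the join-then-sort idea easier to see, here is the same logic sketched on plain Scala collections rather than RDDs. The object `PrefixSuggest` and its method `topMatches` are hypothetical names, and treating unrated movies as lowest-ranked is my assumption.

```scala
object PrefixSuggest {
  // names:   vertex id -> movie name
  // ratings: vertex id -> imdbRating (may be missing for some movies)
  def topMatches(names: Map[Long, String],
                 ratings: Map[Long, Double],
                 prefix: String): Seq[(String, Option[Double])] =
    names.toSeq
      .filter { case (_, name) => name.startsWith(prefix) }
      // Equivalent of a left outer join on the vertex id
      .map { case (id, name) => (name, ratings.get(id)) }
      // Highest rating first; movies without a rating sort last
      .sortBy { case (_, rating) => -rating.getOrElse(Double.NegativeInfinity) }
}
```

The RDD version does the same three steps with `filter`, `leftOuterJoin` and `sortBy`, only distributed across partitions.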

My actor, director and language files were CSV-like files containing the names and the vertex ID values that I had pre-generated.

For example,

'shah rukh khan': 75571848106

'subhash ghai': 69540999294

'hindi': 10036785003, 'telugu': 61331709614

My movie file was in JSON form, one document per movie, containing all this connected data. For example -

{"inLanguage": 10036785003,

"director": 52308324411,

"movieId": "0112870",

"potentialAction": "http://www.imdb.com/title/tt0112870/",

"name": "dilwale dulhania le jayenge",

"imdbRating": 8.3,

"actors": [75571848106, 19194912859, 79050811553, 37409465222, 44878963029, 10439885041, 85959578654, 31779460606, 39613049947, 29336046116, 64948799477, 71910110134, 1911950262, 19092081589, 77693233734]

}
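As a rough sketch of how one such record can be turned into typed values with json4s, assuming each movie sits on a single line of the file (the program reads it line by line): the case class `MovieRecord` and the function `parseLine` are hypothetical names for illustration.

```scala
import org.json4s._
import org.json4s.jackson.JsonMethods._

object ParseMovieLine {
  // Hypothetical holder for the fields used to build vertices and edges
  case class MovieRecord(
    movieId: Long,
    name: String,
    director: Long,
    inLanguage: Long,
    actors: List[Long],
    imdbRating: Double)

  // Returns None when a field is missing or has an unexpected JSON type
  def parseLine(line: String): Option[MovieRecord] = {
    val json = parse(line)
    (json \ "movieId", json \ "name", json \ "director",
     json \ "inLanguage", json \ "actors", json \ "imdbRating") match {
      case (JString(id), JString(name), JInt(dir),
            JInt(lang), JArray(actors), JDouble(rating)) =>
        Some(MovieRecord(
          id.toLong, // "0112870" becomes 112870
          name,
          dir.toLong,
          lang.toLong,
          actors.collect { case JInt(a) => a.toLong },
          rating))
      case _ => None
    }
  }
}
```

Note that converting movieId to a Long drops its leading zeros, so the IMDb URL in potentialAction is the safer place to recover the original identifier.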

If you need these files, let me know and I can email them. Last but not least, I found building my project with SBT much easier than building without it, as it offers a Gradle-like way to build projects.

Here is the sample sbt file that I used to build the project -

name := "PropertyGraph"

version := "1.0"

scalaVersion := "2.10.4"

connectInput in run := true

// additional libraries

libraryDependencies ++= Seq(

"org.apache.spark" %% "spark-core" % "1.5.0" % "provided",

"org.apache.spark" %% "spark-sql" % "1.5.0",

"org.apache.spark" %% "spark-hive" % "1.5.0",

"org.apache.spark" %% "spark-streaming" % "1.5.0",

"org.apache.spark" %% "spark-streaming-kafka" % "1.5.0",

"org.apache.spark" %% "spark-streaming-flume" % "1.5.0",

"org.apache.spark" %% "spark-mllib" % "1.5.0",

"org.apache.spark" %% "spark-graphx" % "1.5.0",

// Java libraries use a single % because they have no Scala version suffix

"org.apache.commons" % "commons-lang3" % "3.0",

"org.eclipse.jetty" % "jetty-client" % "8.1.14.v20131031",

"org.json4s" %% "json4s-native" % "{latestVersion}",

"org.json4s" %% "json4s-jackson" % "{latestVersion}"

)

The experiment did not give me real-time computation on the graph (as graph databases like Neo4j or Virtuoso do), since Spark processing takes time even on a single Spark node, and I suspect that adding nodes would increase the time further because of the overhead of distributing work and fetching results. I hope this continues to get better with future versions of Spark (1.6 is out soon!).

No Fox too fast, No Fence too high....